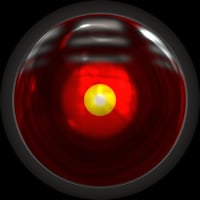

In the optimistically titled film ‘2001’, Stanley Kubrick introduced a world where AI (in the form of the cycloptic HAL 9000) was entrusted with the critical functions of a spaceship. HAL was considered infallible by its human masters. However, HAL was confused by conflicting priorities and orders, and this led to unexpected behaviour and conflict.

While I’m not expecting our industry to hand control to machines, I hope we recognise that autonomous decision-making is a key technology for driving operational efficiency. HAL may not be in the London Market, but when was the last time you had to manage an authorisation workflow or currency risk, or develop loss triangles, manually?

We trust machines to manage the process and assist in decisions, so why do we hear about Robotic Process Automation (RPA) as an emerging technology? Firstly, we need to understand that process automation does not need to be robotic. The robotic element was added to account for the use of bots (autonomous programs designed to act like a person) and AI-driven automation. I’ll unpack the different elements below, and try to keep us from getting locked outside the spaceship.

Process Automation

Process automation is the use of defined inputs and logic to determine the actions that should be taken. It is highly reliable and inexpensive, but it requires you to understand exactly what the rules are for the decision. We would all recognise the actions driven by traffic lights, but what if one suddenly shone a purple light? Traditional logic is not going to solve that problem…
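To make the traffic-light point concrete, here is a minimal sketch of rule-based automation in Python. The names (`Action`, `RULES`, `decide`) are my own illustrations rather than any particular product's API, and the rule table is an assumption about what the "defined inputs and logic" might look like.

```python
from enum import Enum


class Action(Enum):
    STOP = "stop"
    PREPARE = "prepare"
    GO = "go"


# Every input the process understands, mapped to exactly one action.
# (Hypothetical rule table for illustration only.)
RULES = {
    "red": Action.STOP,
    "amber": Action.PREPARE,
    "green": Action.GO,
}


def decide(light: str) -> Action:
    """Return the action for a known light colour, or fail loudly."""
    try:
        return RULES[light.lower()]
    except KeyError:
        # The purple light: no rule exists, so the process cannot proceed.
        raise ValueError(f"No rule defined for a {light!r} light") from None
```

Calling `decide("green")` returns `Action.GO` every time, which is where the reliability comes from. Calling `decide("purple")` raises an error, because nobody wrote a rule for it.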

Bots

Bots are, as I said above, autonomous. They are designed to mimic the behaviour of users in the system and can, therefore, include logic or AI that was not in the original program. They often do this by ‘reading’ and ‘writing’ via the existing user interface. While this can add great flexibility to a business, it is (in my opinion) a stopgap at best. Implicit in the decision to apply bots is the idea that the original program could do this but that, for reasons of cost or technology, it is better to have a bot act instead. I am not arguing that bots have no value, but you must consider them a step towards the solution, not a solution in themselves.

AI-driven

The current holy grail of process automation. These programs can be trained to recognise the various inputs and derive the correct action themselves, based on large amounts of existing information. This is great when rules are nuanced or not well understood. Good examples of AI also learn on the job, adapting to new information as it appears, gauging their own confidence in a decision, and asking for human help when that confidence is low. Take the traffic lights above: AI might ask a human for a decision on the purple light but follow that recommendation thereafter. The danger is still that there may be unexpected conditions (a blue light or a white light), or that the purple light might not mean the same thing every time or require the same response every time. AI can either ask a human for help frequently (thereby negating its business case) or make the decisions itself. A confidence threshold of around 95% is often used when considering autonomous action. But consider the ramifications of the remaining 5%; after all, history is littered with regrets following ‘it’ll never happen’.
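The ask-then-remember behaviour described above can be sketched as follows. This is an illustration under stated assumptions, not a real AI system: `toy_model` is a hypothetical stand-in for a trained model, `AssistedDecider` and `ask_human` are names invented for this example, and the 0.95 threshold simply mirrors the figure quoted above.

```python
from typing import Callable, Dict, Tuple

KNOWN = {"red": "stop", "amber": "prepare", "green": "go"}


def toy_model(light: str) -> Tuple[str, float]:
    """Hypothetical stand-in for a trained model: returns (action, confidence)."""
    if light in KNOWN:
        return KNOWN[light], 0.99
    return "stop", 0.40  # a low-confidence guess for anything unfamiliar


class AssistedDecider:
    """Acts alone above the threshold; otherwise asks a human and remembers."""

    def __init__(
        self,
        model: Callable[[str], Tuple[str, float]],
        ask_human: Callable[[str], str],
        threshold: float = 0.95,  # illustrative value taken from the text
    ) -> None:
        self.model = model
        self.ask_human = ask_human
        self.threshold = threshold
        self.learned: Dict[str, str] = {}

    def decide(self, light: str) -> str:
        # Reuse an earlier human ruling. Note the risk raised in the text:
        # this assumes the purple light means the same thing every time.
        if light in self.learned:
            return self.learned[light]
        action, confidence = self.model(light)
        if confidence >= self.threshold:
            return action  # autonomous decision
        answer = self.ask_human(light)  # escalate to a person
        self.learned[light] = answer
        return answer
```

With this sketch, the first purple light triggers a question to the human and the second does not. Lowering `threshold` reduces the number of questions, but it increases the share of decisions made on a guess.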

So, to make the best use of process automation we have a broad range of options. My advice is always to take logical, rule-based process automation to its fullest extent before considering the other methods. Not only does this ensure that you get the best return on investment, but it also means that you can develop a clear understanding of the remaining problems. We know from working in partnership across the AI industry (Machine Learning, Natural Language Processing, and Process Automation, for example) that a clear understanding of the problem is a key factor in a successful deployment of these technologies.

I see the current state of AI driving an augmentation rather than an automation strategy. Augmenting human interaction (such as prioritisation and extraction of data from unstructured sources) provides a good return. The user retains the final decision but gets the best from the AI sifting through large amounts of information. We all know that the amount of information in the market is increasing greatly, and AI is very good at sifting through it. Keeping that final step in the hands of the experienced underwriter or claims manager reduces the risk.
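As a rough sketch of what augmentation through prioritisation might look like, the snippet below ranks items for a human to review and decides nothing itself. `Submission`, its `score` field, and `prioritise` are hypothetical names for this illustration; in practice the score would come from whatever model you have trained.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Submission:
    reference: str
    score: float  # hypothetical model score: higher means "look at this first"


def prioritise(submissions: List[Submission], top_n: int = 3) -> List[Submission]:
    """Order the queue by model score; the final decision stays with a person."""
    return sorted(submissions, key=lambda s: s.score, reverse=True)[:top_n]
```

The design choice is that the model only changes the order in which the underwriter or claims manager sees the work. A poor score costs some time, not a wrong decision.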

In summary, automation delivers real benefit where the value of the automated decision is low. It takes the mundane task away from the (relatively) expensive employee. Where the value of the decision is higher, consider augmenting the human decision instead. This is a well-established compromise that other industries champion: consider semi-autonomous spaceships(!), cars, planes, drones, etc. However, if you’re asking me to trust a machine to autonomously manage the insurance life-cycle (or a spaceship) …

“I’m sorry, Dave. I’m afraid I can’t do that.”